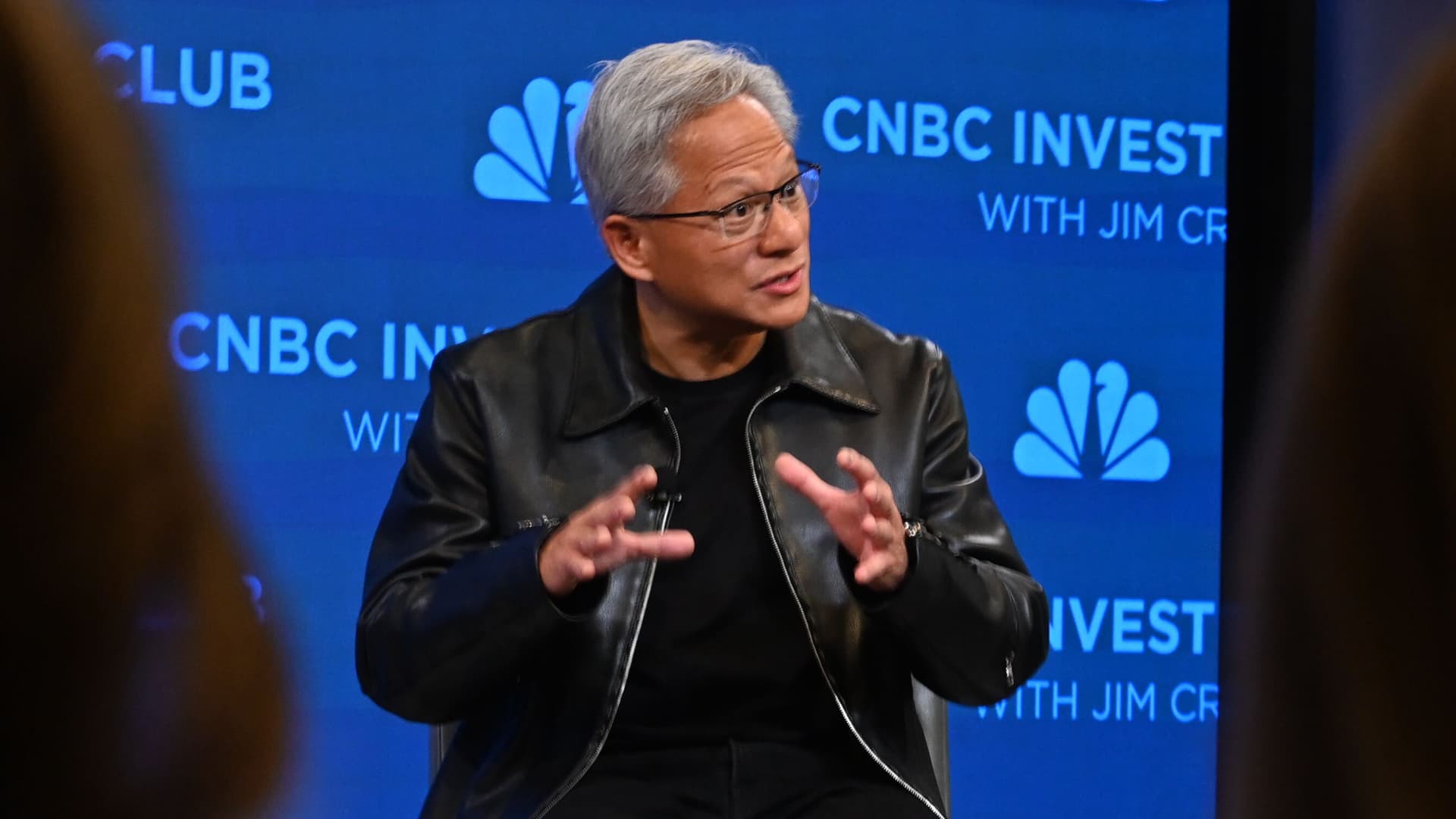

NVIDIA CEO Jensen Huang told CNBC that the company's new deal with OpenAI, announced in late September 2025, is different from past supplier contracts. Central to the announcement is a commitment to deploy at least 10 gigawatts of NVIDIA-powered compute to support OpenAI's next-generation models. That scale of compute is unusual and signals a step change in how scalable AI infrastructure will be built and delivered. Could this partnership reshape the economics of AI and accelerate AI-powered automation across industries?

Background: Why this partnership matters

Demand for specialized AI compute has exploded as foundation models grow larger and more capable. Historically, hardware suppliers sold chips and systems to cloud providers and AI labs on a transactional basis. The 2025 NVIDIA-OpenAI partnership is being positioned as more than a purchase order. According to Jensen Huang, it includes deep co-engineering on system design, long-term supply commitments, and substantial NVIDIA investment in supporting infrastructure. For businesses, that means enterprise AI infrastructure may come bundled with closer vendor collaboration and multiyear guarantees rather than ad hoc spot procurement.

Simple explanations for technical terms

- GPU accelerator: a specialized processor designed to run the math-heavy tasks behind modern AI models much faster than a general-purpose CPU.

- Compute capacity: used here to mean the total power draw of the datacenters and systems dedicated to AI workloads. Power draw is a proxy for the scale of deployed hardware, not a direct measure of how much computation that hardware delivers.

- Co-engineering: vendor and customer working together on both hardware and software design to optimize performance end to end.

Key details and findings

- Scale: NVIDIA will support deployment of at least 10 gigawatts of compute capacity for OpenAI's next-generation models. One gigawatt equals 1,000 megawatts.

- Depth of collaboration: NVIDIA and OpenAI are collaborating on systems design, not just selling discrete components. That typically covers rack-level architecture, cooling and power planning, and hardware-software integration.

- Long-term commitments: The agreement includes extended supply guarantees from NVIDIA, reducing the procurement uncertainty OpenAI would otherwise face in acquiring GPUs and systems.

- NVIDIA investment: Huang said NVIDIA will invest significantly in infrastructure to make this possible, shifting some implementation risk from buyer to supplier.
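
To put the 10-gigawatt figure in perspective, the unit conversion in the Scale item can be extended into a rough energy estimate. The Python sketch below is a back-of-envelope illustration only: the function names are hypothetical, and the assumption that the full capacity is drawn continuously for a year is ours, not something stated in the announcement, so the result is an upper bound rather than a forecast.

```python
# Back-of-envelope conversions for the 10 GW figure.
# Assumption (not from the announcement): the stated capacity is drawn
# continuously for a full year unless a lower utilization is passed in.

MW_PER_GW = 1_000
GWH_PER_TWH = 1_000
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def gigawatts_to_megawatts(gw):
    """Convert gigawatts to megawatts (1 GW = 1,000 MW)."""
    return gw * MW_PER_GW


def annual_energy_twh(gw, utilization=1.0):
    """Energy in terawatt-hours if `gw` is drawn for one year.

    `utilization` is the fraction of the year spent at full draw
    (1.0 means continuous operation at the stated capacity).
    """
    gwh = gw * HOURS_PER_YEAR * utilization
    return gwh / GWH_PER_TWH


committed_gw = 10
print(gigawatts_to_megawatts(committed_gw))  # 10000
print(annual_energy_twh(committed_gw))       # 87.6
```

Passing a lower `utilization` value, for example `annual_energy_twh(10, 0.5)`, scales the estimate down proportionally, which is a simple way to explore less aggressive operating assumptions.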

Why those points matter

- Material expansion of AI compute: Deploying 10 gigawatts represents a major increase in global AI compute capacity for a single customer. This will enable faster experimentation and deployment of larger models and services, supporting enterprise AI adoption at scale.

- Faster commercial rollout: With dedicated capacity and vendor engineering support, OpenAI and its customers can expect shorter lead times for model training and inference. That lowers the barrier for businesses to integrate powerful AI features into products and workflows.

- Competitive pressure on suppliers: Long-term, large-scale commitments like this concentrate demand and could sharpen the advantage of suppliers that can offer end-to-end AI infrastructure solutions. Competing chipmakers and system integrators will likely need to match both supply reliability and co-engineering offerings.

- Operational and energy implications: Adding gigawatts of compute has consequences for power provisioning, cooling, and datacenter siting. Expect a shift in where and how cloud and AI capacity is located, and increased focus on energy efficiency for next-generation datacenters.

Implications and analysis for enterprises

For enterprise technology leaders, the NVIDIA-OpenAI deal is consequential in several ways:

- Deeper strategic vendor relationships: More companies may prefer long-term, co-engineered agreements over one-off hardware purchases to secure capacity and performance. Building topical authority around enterprise AI infrastructure will be important for vendors and buyers alike.

- Acceleration of automation: Greater access to large-scale models can accelerate automation initiatives across finance, health care, manufacturing, and other sectors that rely on large language models and multimodal AI services. This can translate into faster process automation and new product capabilities.

- Risk concentration: While the deal reduces procurement risk for OpenAI, it also concentrates influence among a small set of suppliers. That raises strategic and regulatory questions about resilience and market power.

- Talent and operational shifts: Organizations adopting large-scale AI will need expertise in systems integration, model governance, and energy management, not just data science. Planning for scalable AI infrastructure and GPU compute operations will be a priority.

Practical takeaways and SEO-conscious guidance

Companies planning to adopt or scale AI should reassess procurement strategies. Securing access to large-scale model capabilities may require more collaborative vendor arrangements, earlier capacity planning, and investment in systems-level skills. Smaller organizations should evaluate partnerships with cloud providers offering managed access to these large models if direct capacity procurement is infeasible.

From an SEO perspective, enterprise technology teams and content strategists should focus on semantic intent mapping and conversational keywords. Create content clusters that demonstrate topical authority around scalable AI infrastructure, enterprise AI infrastructure, AI compute solutions, and the NVIDIA OpenAI partnership 2025. Use question-based formats such as "How does GPU compute power impact AI automation in enterprise?" and resources that optimize for voice and AI-driven search.

Conclusion

The NVIDIA and OpenAI agreement, notable for its scale, co-engineering focus, and long-term commitments, is a signpost for how enterprise AI infrastructure will be sourced and delivered in the coming years. It promises faster rollout of powerful AI services and sharper competition among suppliers, but also concentrates operational and market risk. Businesses should prepare for an era where winning in automation depends not only on models, but also on the systems and supplier relationships that power them. How regulators, competing vendors, and customers respond to compute concentrated at this scale will shape the next phase of AI-driven enterprise transformation.