Nvidia and OpenAI are moving from a supplier relationship to deep co-deployment of co-optimized systems, planning at least 10 gigawatts of datacenter capacity. The partnership accelerates model training, increases demand for GPU technology and reshapes the AI infrastructure landscape.

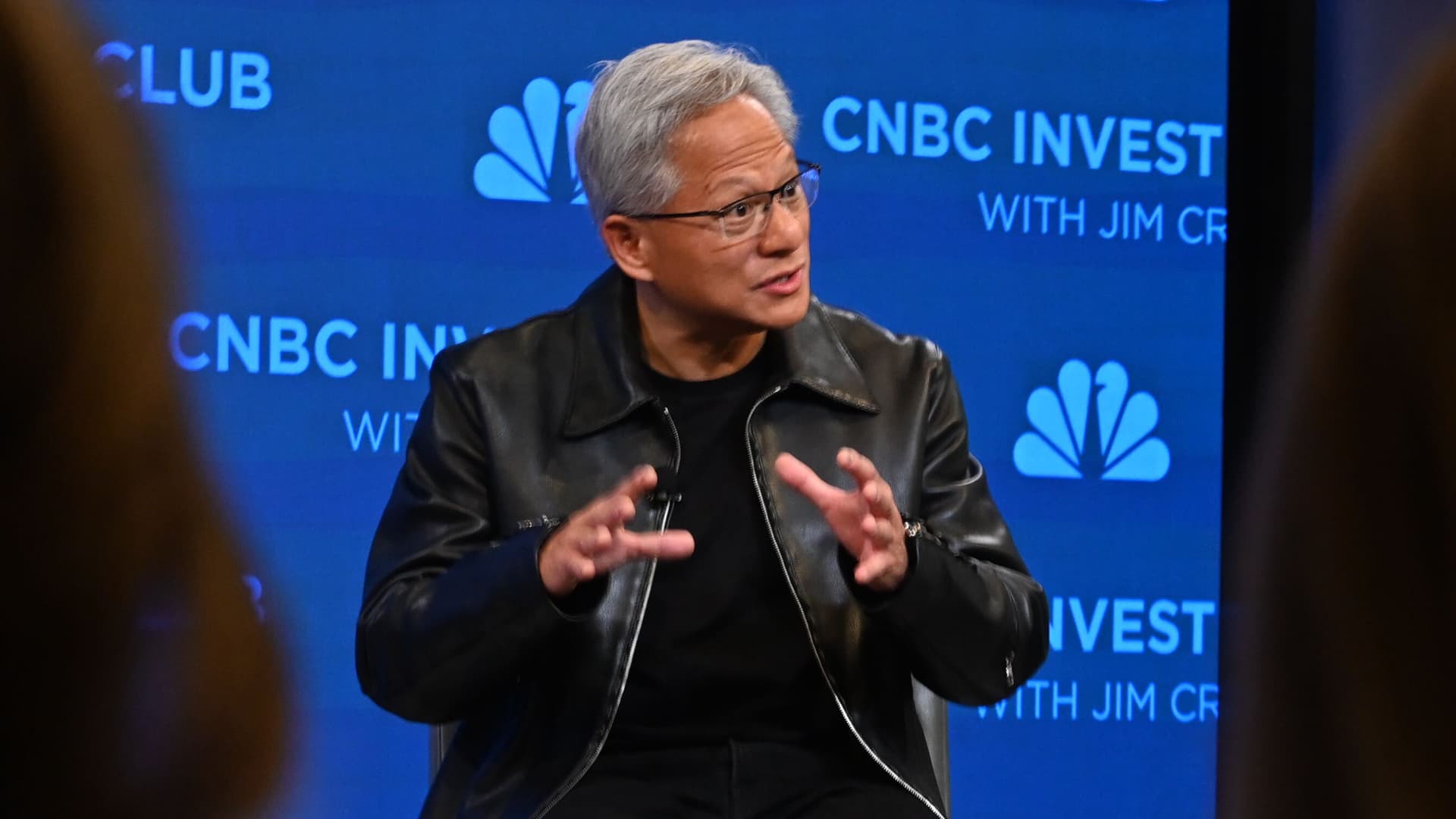

Nvidia and OpenAI announced a strategic partnership that shifts their relationship from a traditional vendor-customer model to deep co-development and co-deployment of AI infrastructure. In a CNBC interview, Nvidia CEO Jensen Huang explained that Nvidia will not only supply chips but also work with OpenAI to deploy full systems at massive datacenter scale. Discover how this move could change AI infrastructure, datacenter efficiency and the economics of training large models.

Historically, AI labs bought hardware, racks and software from vendors and then integrated the components themselves. That model is changing because modern large language models and multimodal systems demand more compute and tighter coordination among chips, firmware and training software. The Nvidia and OpenAI partnership addresses three chronic challenges for large-model training:

Reporting on the deal and the CNBC interview highlighted several concrete elements of the arrangement. Explore these points to understand the strategic impact.

To make this accessible, here are a few key concepts. Co-optimized hardware and software means designing chips and system software together so training workloads run faster and cost less. Vendor lock-in refers to the risk that a customer becomes dependent on a single provider's combination of hardware and software, which can make switching costly or difficult later.

Uncover how the partnership may reshape competition, costs and governance in AI infrastructure.

By co-deploying validated stacks at massive scale, OpenAI could shorten training cycles for larger models and accelerate capability improvements. However, gigawatt-scale deployments imply very large upfront capital and operational costs. Reports of potential investments in the tens of billions underscore the financial magnitude and the strategic choice between speed and flexibility.
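To give a rough sense of what a gigawatt-scale power budget means in hardware terms, here is a back-of-the-envelope sketch. Everything in it except the 10 gigawatt figure is an assumption made for illustration: the function name `accelerators_supported`, the power usage effectiveness (PUE) of 1.25, and the 2,000 watts of all-in IT power per accelerator (chip plus its share of CPUs, memory and networking) are hypothetical values, not numbers from the announcement. It also assumes the 10 gigawatts refers to total facility power rather than IT load.

```python
def accelerators_supported(facility_power_gw: float,
                           pue: float,
                           watts_per_accelerator: float) -> int:
    """Estimate how many accelerators a facility power budget can feed.

    facility_power_gw: total facility power in gigawatts.
    pue: power usage effectiveness (facility power / IT power), >= 1.0.
    watts_per_accelerator: assumed all-in IT power per accelerator, in watts.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    if watts_per_accelerator <= 0:
        raise ValueError("watts_per_accelerator must be positive")

    # Power left for IT equipment after cooling and distribution overhead.
    it_power_watts = facility_power_gw * 1e9 / pue
    return int(it_power_watts // watts_per_accelerator)


# Illustrative inputs only (hypothetical PUE and per-accelerator power).
estimate = accelerators_supported(facility_power_gw=10,
                                  pue=1.25,
                                  watts_per_accelerator=2000)
print(f"Roughly {estimate:,} accelerators under these assumptions")
```

Under these assumed inputs the estimate lands in the millions of accelerators. The result scales inversely with both the PUE and the per-accelerator power, so different assumptions shift it substantially.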

Nvidia moves from being a component supplier to a strategic infrastructure partner. That raises the risk of vendor lock-in for major AI labs and enterprises if optimal performance requires Nvidia-specific systems and tooling.

Cloud operators and alternative chip makers may respond by offering stronger co-development services, integrated managed deployments or price incentives. The market could shift from selling raw compute to selling integrated, validated stacks that reduce deployment risk.

Large joint deployments will attract regulatory and customer attention on resilience, transparency and geopolitical supply chain risks. Stakeholders will likely push for clearer standards on auditability and performance claims.

As hardware and software become more tightly coupled, demand will grow for engineers who can bridge system design, firmware and machine learning training pipelines. Operational roles tied to rack-level tuning may consolidate under specialized deployment teams.

This development fits broader trends toward platform plays, where providers offer end-to-end solutions rather than discrete parts. Companies that control both the stack and the deployment pipeline can extract more value but also bear greater responsibility for outcomes. Discover how this aligns with automation trends and the growing importance of integrated AI infrastructure.

Key signals to monitor include how competitors and cloud providers respond, whether industry standards for performance and auditability emerge, and how regulatory scrutiny of large-scale AI deployments evolves. Explore industry commentary and vendor announcements for signs of shifting competitive dynamics and new managed service offerings.

For teams evaluating AI infrastructure options, explore the trade-offs between integrated partnerships and flexible multi-provider approaches. Learn more about datacenter efficiency, AI hardware choices and how to plan for long-term resilience.