Jensen Huang frames a deeper Nvidia and OpenAI collaboration that could deploy about 10 gigawatts of Nvidia-powered datacenter capacity and involve up to 100 billion dollars in multi-year support, with the aim of accelerating scalable AI compute and speeding LLM deployment for enterprises.

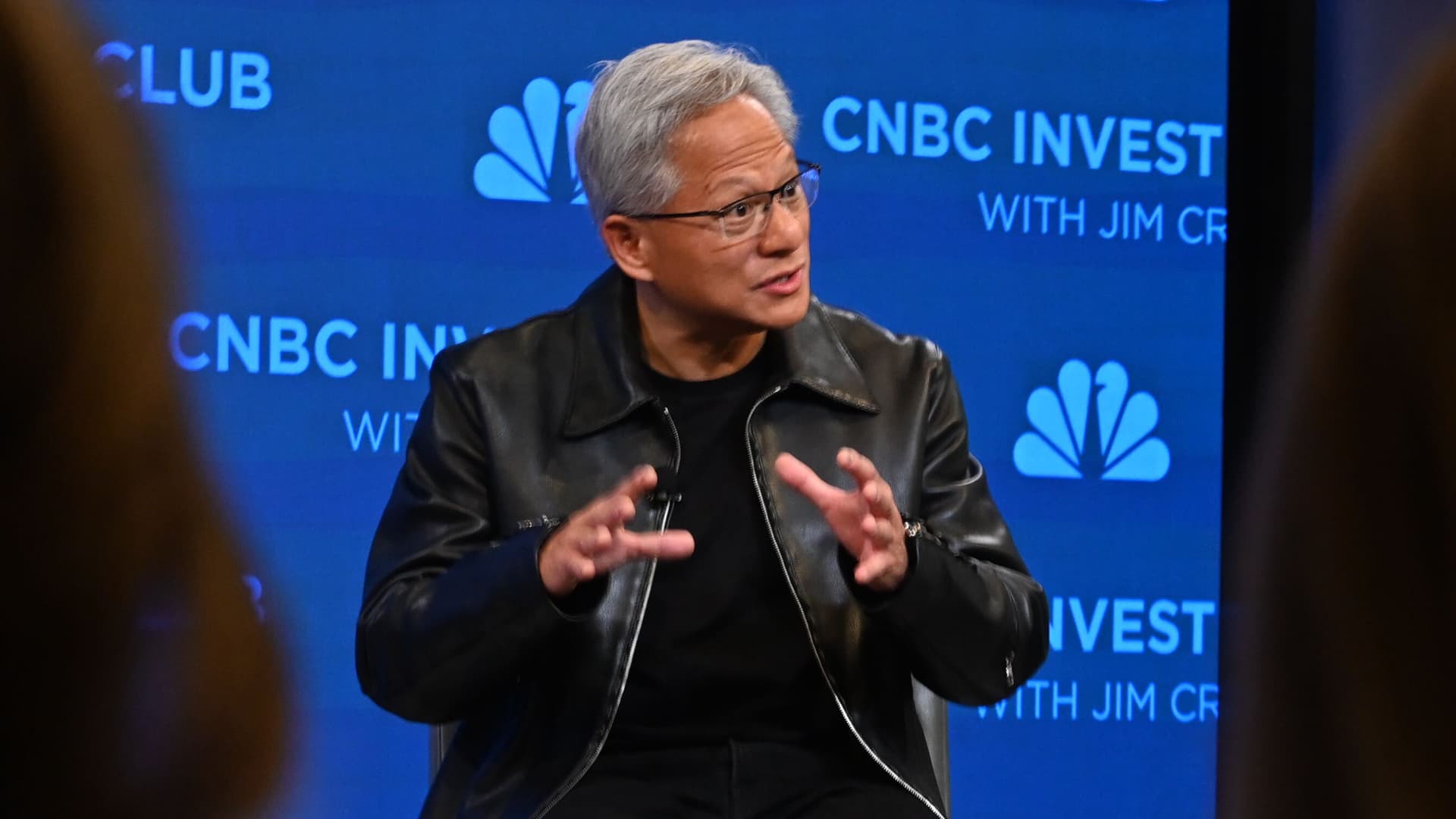

In a CNBC interview with Jim Cramer, Nvidia CEO Jensen Huang described the company's new agreement with OpenAI as more than a typical sale. Published reporting says the arrangement could deploy about 10 gigawatts of Nvidia-powered datacenter capacity for next-generation models and involve up to 100 billion dollars in multi-year commitments. The partnership aims to accelerate scalable AI compute by co-designing hardware, software, and models to unlock faster, lower-latency services for enterprise AI integration.

Large language models and advanced generative AI need thousands of specialized processors and tightly tuned software stacks to perform at scale. Building that capability requires next-generation AI hardware, skilled engineering, and datacenter power at hyperscale levels. The Nvidia and OpenAI collaboration is positioned as a shift from buying components to co-designing systems that integrate chips, networking, and model engineering for better performance and lower cost per operation.

For companies using OpenAI-powered services, this collaboration could mean access to larger, faster, and more responsive models. That can transform automation workflows, improve text and code generation quality, and enable new real-time features.

At the market level, a large buyer securing dedicated capacity can tighten supply and influence the pricing and availability of cloud AI platforms. This dynamic may accelerate vertical integration as major AI providers secure preferred supply chains and scalable AI compute.

Co-designing systems with Nvidia may give OpenAI performance and cost advantages that are hard for smaller players to match quickly. That could increase market concentration, leaving leading capabilities in the hands of a few firms.

Deep partnerships raise governance issues about transparency, security, and market fairness. Regulators and enterprise customers will likely monitor exclusivity, preferential access, and pricing. Organizations should evaluate vendor lock-in risks and contingency plans as part of their procurement strategies.

The Nvidia and OpenAI collaboration signals a change in how leading AI systems will be built and provisioned. By committing large amounts of capacity and closer engineering collaboration, the companies aim to accelerate model rollout and performance, while raising questions about access, cost, and competition. Businesses should prepare for a landscape where advanced AI capabilities are tied to deep platform partnerships, and plan cloud procurement, risk management, and skills development accordingly.

Editorial note: This article reflects reporting and public statements about the partnership and highlights likely impacts for enterprise AI integration and scalable AI compute.