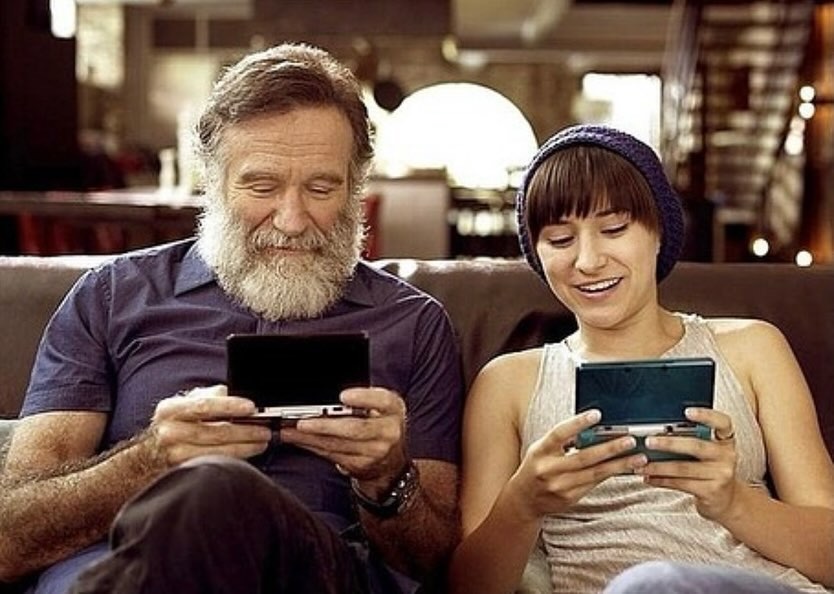

After AI-generated videos and images of Robin Williams circulated online, his daughter Zelda Williams called the content disgusting and urged people to stop. The case highlights the state of deepfake technology in 2025, gaps in platform policy, and the need for digital likeness protection.

When AI-generated videos and images recreating the late Robin Williams began circulating online, his daughter Zelda Williams spoke out, describing the content as disgusting and asking people to stop sending her these recreations of her father. Reported on October 8, 2025, the episode underscores how today's deepfake technology and AI-generated celebrity videos can retraumatize families, and it has sparked heated debate about platform responsibility and legal protection for posthumous likenesses.

Advances in generative AI make it possible for hobbyists and bad actors to produce convincing synthetic media that mimics a real person's voice or face. For families and estates, these tools create practical, legal, and emotional problems. Platforms face massive moderation loads. Laws governing digital likeness rights and postmortem publicity vary by jurisdiction. And surviving relatives can experience renewed grief when a recognizable voice or face is recreated without consent.

Deepfakes are synthetic media created by machine learning models that learn patterns from real footage and then generate new content resembling the original. Consumer apps can produce playful clips, while more advanced models enable AI voice cloning and hyperrealistic face replication. The more footage and audio of a person that is available, the more convincing the result tends to be.

This incident shows that technology alone cannot solve the problem. Platforms need better policy and tooling to balance creative expression with protection from abuse. Practical steps include clear reporting flows, opt-out mechanisms for estates, labels on AI-generated content so viewers can judge its authenticity, and investment in deepfake detection tools. Companies that offer synthetic content features should provide explicit consent workflows and easy removal services to reduce brand and reputational risk.

Current laws on using a person's likeness after death are inconsistent. That legal patchwork increases uncertainty for platforms and creators. Businesses building or licensing AI-driven media tools face rising regulatory risk as governments consider laws to address AI impersonation and AI-powered digital identity theft.

Legal teams must account for posthumous publicity rights in licensing deals. Product teams should build guardrails into creative tools. Customer support operations need processes to respond quickly and empathetically to takedown requests. For media companies and brands, pre-clearance processes and respectful engagement with families will be essential to avoid backlash.

Industry commentary frames Zelda Williams's reaction within a wider debate about ethical AI-generated content, the misinformation crisis, and digital identity protection. From an SEO perspective, articles that cite authoritative sources and demonstrate E-E-A-T (experience, expertise, authoritativeness, and trustworthiness) tend to perform better in searches about deepfakes, deepfake detection, and reputation management. Phrases readers commonly search for include "deepfake technology 2025," "deepfake detection tools 2025," "AI generated celebrity videos," and "AI voice cloning."

Zelda Williams's public protest is unlikely to be a one-off event. As generative AI tools become more accessible, expect more disputes over acceptable use of a person's likeness, living or dead. Platforms, lawmakers, and businesses will face pressure to define clear rules and provide stronger opt-out and detection tools. For the public and policymakers, the core question remains how to protect dignity and privacy while allowing legitimate creative use of new tools. The answer will shape the future of digital likeness protection and online reputation management.