Nvidia and OpenAI commit to building at least 10 gigawatts of AI datacenter capacity, with potential investment near $100 billion. The partnership signals a shift to co-investment, integrated engineering, and AI-driven automation that will reshape enterprise AI infrastructure and supply chains.

The Nvidia and OpenAI agreement, announced in late September 2025, commits the companies to building and deploying at least 10 gigawatts of AI datacenter capacity and could see Nvidia invest as much as $100 billion over time. That scale is unprecedented in the AI infrastructure market and raises a central question: is this simply a large procurement, or a new model for how enterprise AI infrastructure and automation will be provisioned worldwide?

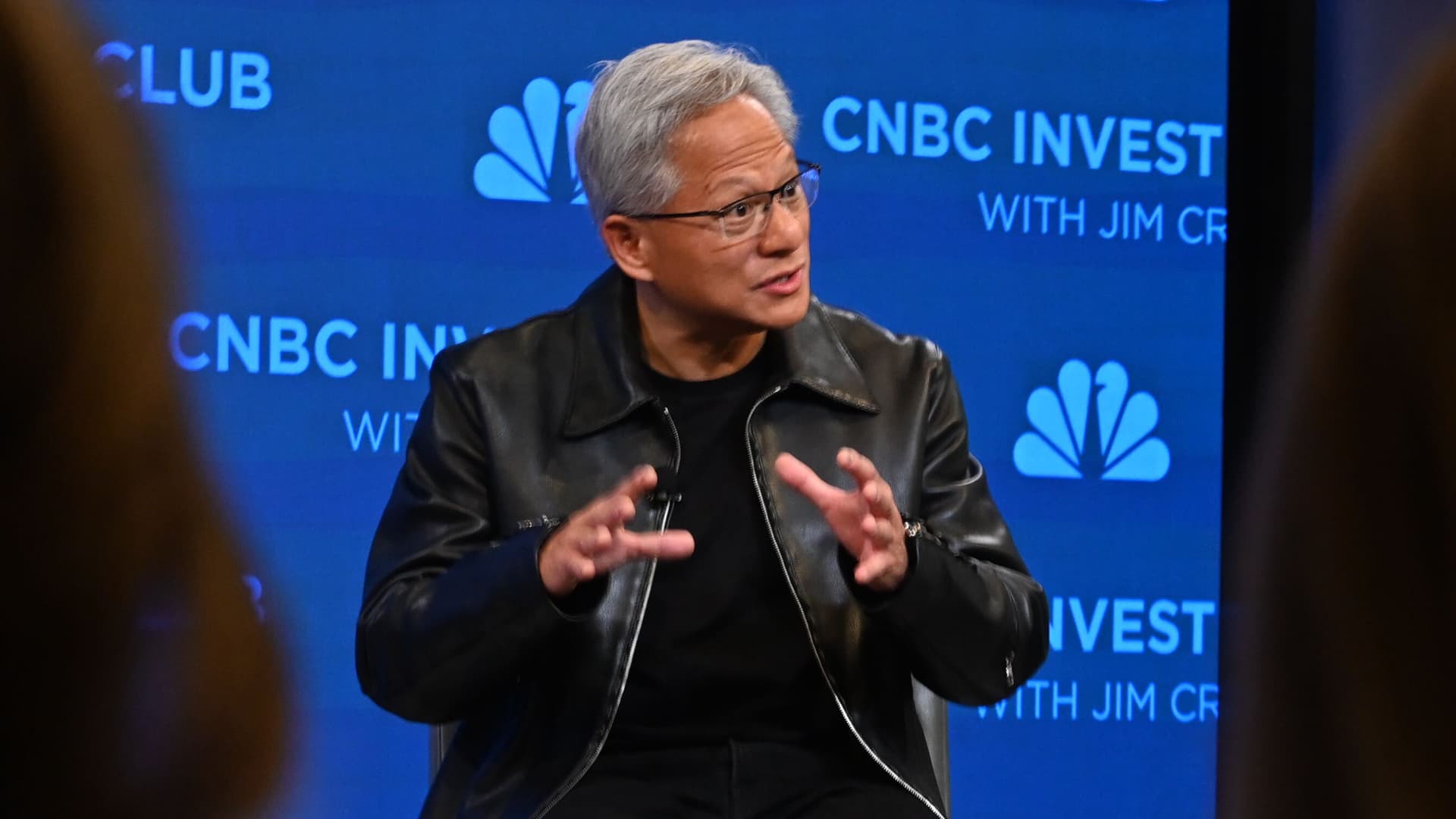

In an October 7, 2025 interview with CNBC, Nvidia CEO Jensen Huang framed the partnership as fundamentally different from past vendor-customer deals. By aligning hardware engineering, software stacks, and deployment planning, the arrangement looks less like a transaction and more like joint planning for hyperscale datacenter capacity. For organizations planning AI strategies, the deal highlights the need to think in terms of co-investment, long-term interoperability, and AI-driven datacenter automation.

Long-term co-investment changes incentives. When a vendor also takes on capital and operational roles, investment priorities shift toward workload optimization and long-term interoperability. Managing thousands of specialized systems across geographies will require advanced orchestration and robust MLOps practices to avoid human bottlenecks. Enterprise AI scalability will depend as much on software that automates operations as on raw chip supply.

Huang emphasized that the deal aligns incentives across hardware, software, and deployment to speed optimization for specific AI workloads. This mirrors a broader trend in which leaders move from transactional procurement to integrated infrastructure relationships in order to control performance and timelines. For teams building enterprise AI systems, the focus should be on generative AI infrastructure that pairs optimized GPU cloud services with mature MLOps and orchestration tooling.

The Nvidia and OpenAI partnership points to a new playbook for AI infrastructure: large-scale co-investment, integrated engineering, and automated operations. Businesses and policymakers should watch how this deal affects chip availability, datacenter siting, and operational tooling. The takeaway for companies is clear: plan for an ecosystem where compute is co-designed and operated at scale, and where automation and MLOps are strategic assets.
