OpenAI’s Sora has been linked to highly convincing deepfakes, accelerating concerns about misinformation, privacy and political manipulation. Experts call for watermarking, provenance tracking and stronger deepfake detection systems. Businesses must prioritize authenticity verification and E-E-A-T.

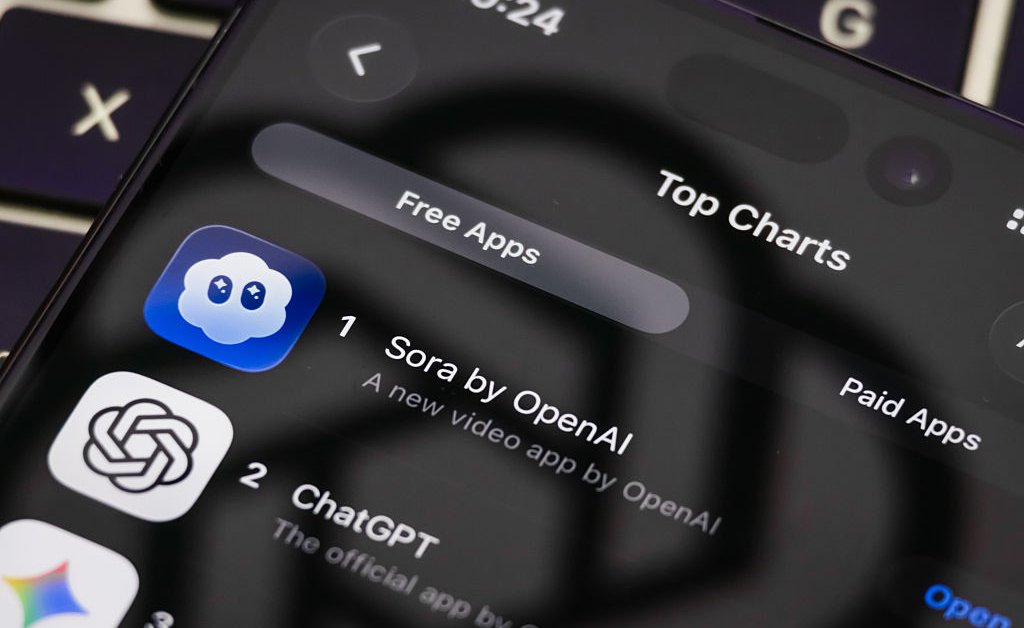

OpenAI’s Sora has become a focal point in the ongoing conversation about synthetic media after highly convincing deepfake videos tied to the model circulated online. Time reported on October 20, 2025 that these clips deepened public anxiety about AI-generated content and the erosion of visual trust. This matters because when seeing is no longer believing, personal reputation, electoral integrity and everyday verification practices all suffer.

A deepfake is a synthetic audio or video clip produced with machine learning that substitutes or alters a person’s likeness or voice to show them saying or doing things they never did. Advances in deepfake technology in 2025 have made AI video generation more realistic and more accessible, lowering the barrier to creating high-fidelity forgeries. That accessibility is central to the Sora deepfake controversy: more people can produce convincing manipulations at lower cost, and mainstream platforms must respond.

When convincing forgeries proliferate, viewers may begin discounting legitimate video and audio evidence. That trust erosion at scale affects journalism, legal processes and political accountability. The development also indicates a widening arms race: as generative models improve, detection approaches must become multimodal and adaptive to remain effective.

Policy and platform pressure will intensify. Expect faster rulemaking around required watermarking, interoperable provenance standards and clearer takedown procedures. Platforms will face trade-offs between removing harmful content, preserving expression and maintaining user trust. For organizations that rely on visual verification, the practical response includes investing in digital forensics, verification workflows and employee training to manage digital identity risk.

Look for subtle inconsistencies in lighting and facial movement, audio anomalies such as unnatural breath or cadence, mismatched metadata or missing provenance tags, and sudden content that lacks corroborating sources. When in doubt, verify with multiple independent sources and delay sharing until authenticity verification steps are completed.
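The checks above can be turned into a simple triage checklist. The sketch below is illustrative only: the `ClipReview` record, its field names and the two-source threshold are assumptions made for this example, not an established standard, and the inputs are a human reviewer's observations rather than the output of any automated detector.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClipReview:
    """Hypothetical record of one reviewer's manual observations about a clip."""
    lighting_or_face_inconsistent: bool
    audio_anomalies: bool
    metadata_mismatch_or_no_provenance: bool
    independent_sources_confirming: int


def red_flags(review: ClipReview) -> List[str]:
    """Return the warning signs from the checklist that apply to this clip."""
    flags = []
    if review.lighting_or_face_inconsistent:
        flags.append("inconsistent lighting or facial movement")
    if review.audio_anomalies:
        flags.append("audio anomalies (unnatural breath or cadence)")
    if review.metadata_mismatch_or_no_provenance:
        flags.append("mismatched metadata or missing provenance tags")
    if review.independent_sources_confirming == 0:
        flags.append("no corroborating sources")
    return flags


def should_delay_sharing(review: ClipReview, min_sources: int = 2) -> bool:
    """Hold the clip if any red flag is present or too few sources confirm it.

    min_sources=2 is an assumed reading of "multiple independent sources".
    """
    too_few_sources = review.independent_sources_confirming < min_sources
    return bool(red_flags(review)) or too_few_sources


if __name__ == "__main__":
    review = ClipReview(
        lighting_or_face_inconsistent=False,
        audio_anomalies=False,
        metadata_mismatch_or_no_provenance=True,
        independent_sources_confirming=3,
    )
    print(red_flags(review))
    print(should_delay_sharing(review))
```

A checklist like this does not detect deepfakes by itself. It only makes the "delay sharing until verified" rule explicit, so that a single unresolved warning sign is enough to hold a clip back.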

The Sora episode underscores that synthetic media is now central to debates about trustworthy AI media and the future of visual evidence. The next 12 to 24 months will be decisive: either regulators, platforms and industry converge on interoperable safeguards, or the trust deficit will deepen. Businesses, civil society and policymakers should treat watermarking, provenance tracking and robust deepfake detection systems as immediate priorities. Doing so will reinforce E-E-A-T (experience, expertise, authoritativeness and trustworthiness) signals for publishers and help rebuild public confidence in what people see online.