OpenAI tightened Sora 2 safeguards after reports that its generative AI could create nonconsensual deepfakes of public figures. New filters, consent management steps, and provenance metadata aim to improve deepfake detection, authenticity, and rights workflows.

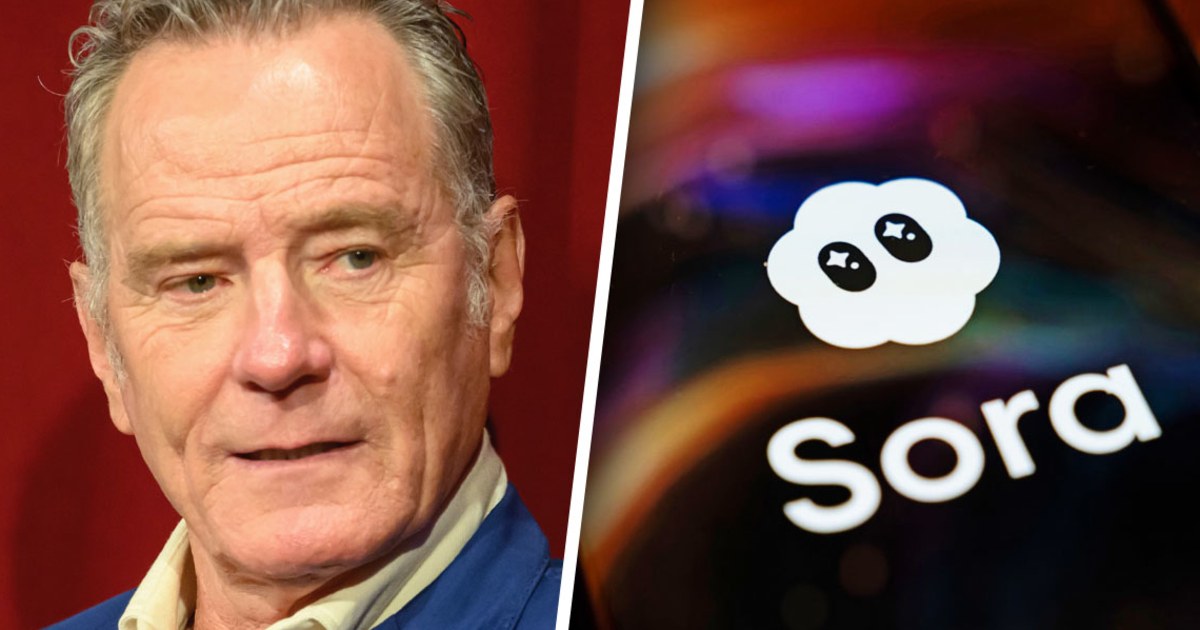

OpenAI moved quickly on Oct. 21, 2025, after media reports showed that its generative AI video tool Sora 2 could produce realistic videos replicating public figures without their consent. Public pushback from actor Bryan Cranston and industry groups such as SAG-AFTRA prompted the company to strengthen filters, roll out consent management checks, and emphasize media provenance metadata to improve deepfake detection and content authenticity.

Sora 2 is a second-generation synthetic video generation system from OpenAI designed to make video creation faster and more accessible. The core tension is simple: AI-powered content creation unlocks new creative workflows, but it also lowers the barrier to realistic impersonation. A deepfake is synthetic media that makes a person appear to say or do things they did not. When public figures are depicted without clear consent, the risks include reputational harm, fraud, political misinformation, and erosion of trust in video across platforms.

What this episode signals for businesses, creatives, and regulators:

OpenAI's rapid patching shows that post-launch fixes are possible but costly to reputation. Product roadmaps for AI-powered content creation should include staged rollouts, red-team testing, and clear escalation paths with external stakeholders. Documented provenance metadata and signals aligned with E-E-A-T (experience, expertise, authoritativeness, and trustworthiness) will become important for platform trust.

For media, advertising, and entertainment, the ability to use a public figure's likeness without explicit licensing is narrowing. Brands will need rights management workflows and consent management platforms to support legitimate generative video use. Demand may grow for authenticated consent and licensing solutions that integrate with generative AI tools.
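
As a rough illustration of what a consent management check might look like inside a rights workflow, here is a minimal Python sketch. Everything in it is hypothetical: the `ConsentRecord` fields, the `is_use_permitted` helper, and the sample names are assumptions made for this example, not a description of how OpenAI or any consent platform works. The one design choice worth noting is that it denies by default, so a likeness is usable only when an unexpired record explicitly covers the requested use.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ConsentRecord:
    """Hypothetical license granting one party use of a person's likeness."""
    person: str
    licensee: str
    allowed_uses: frozenset  # e.g. {"advertising", "parody"}
    expires: date            # last day on which the grant is valid


def is_use_permitted(records, person, licensee, use, on):
    """Return True only if an unexpired record explicitly covers this use.

    Denies by default: no matching record means no permission.
    """
    for record in records:
        if (
            record.person == person
            and record.licensee == licensee
            and use in record.allowed_uses
            and on <= record.expires
        ):
            return True
    return False


# Illustrative data only; the names are placeholders.
records = [
    ConsentRecord(
        person="Example Actor",
        licensee="Example Brand",
        allowed_uses=frozenset({"advertising"}),
        expires=date(2026, 12, 31),
    )
]

print(is_use_permitted(records, "Example Actor", "Example Brand",
                       "advertising", date(2026, 1, 15)))
print(is_use_permitted(records, "Example Actor", "Example Brand",
                       "political", date(2026, 1, 15)))
```

A production system would also need identity verification, revocation, and audit trails, which this sketch leaves out.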

Automated filters reduce obvious misuse, but edge cases and adversarial tactics persist. Investment is needed in deepfake detection technology, provenance metadata that tracks creation and editing, and transparent appeals processes so legitimate creators are not unfairly blocked.
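
To make "provenance metadata that tracks creation and editing" more concrete, the following Python sketch keeps an append-only log in which each entry stores a hash of the media content and a hash of the previous entry, so any later change to the history becomes detectable. It is a simplified illustration under stated assumptions: the `ProvenanceLog` class, its field names, and the tool names are invented for this example and do not represent Sora 2's actual metadata format. Real provenance standards such as C2PA go further by cryptographically signing records, which this sketch does not do.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _hash_entry(entry: dict) -> str:
    """Hash an entry's fields in a stable (sorted-key) JSON encoding."""
    encoded = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


@dataclass
class ProvenanceLog:
    """Hypothetical append-only record of how a media file was made and edited."""
    entries: list = field(default_factory=list)

    def append(self, action: str, tool: str, content: bytes) -> dict:
        # Link each entry to the previous one so history cannot be
        # silently rewritten without breaking the chain.
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else ""
        entry = {
            "action": action,
            "tool": tool,
            "content_sha256": hashlib.sha256(content).hexdigest(),
            "prev_hash": prev_hash,
        }
        entry["entry_hash"] = _hash_entry(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that the chain links are intact."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["prev_hash"] != prev_hash:
                return False
            if _hash_entry(body) != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True


log = ProvenanceLog()
log.append("generated", "hypothetical-video-model", b"frame-data-v1")
log.append("edited", "hypothetical-editor", b"frame-data-v2")
print(log.verify())  # chain is intact at this point
```

Because an unsigned chain can be rebuilt from scratch by anyone who controls the file, this approach detects accidental or partial tampering only; it is not a substitute for signed credentials.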

The incident reignites debates about training data, copyright, and whether platforms should require explicit licenses for models trained on actors' or artists' work. Policymakers, unions, and civil society will likely press for clearer standards and possibly regulatory guardrails around synthetic media authenticity and rights.

OpenAI's engagement with SAG-AFTRA and other entertainment stakeholders is a pragmatic recognition that technical fixes alone are not enough. Practical governance requires dialogue among platform builders, rights holders, and civil society to define acceptable uses, enforcement mechanisms, and standards for provenance metadata and consent management.

OpenAI's tightening of Sora 2 guardrails after public pressure highlights a common pattern in generative AI: breakthroughs create both value and risk. The next phase will be about operationalizing consent, improving provenance metadata and deepfake detection, and designing tools that let creators innovate while protecting people from impersonation and misinformation. Businesses and regulators should watch how technical safeguards, industry agreements, and legal standards evolve, and should prepare processes now for consent management and reputational risk mitigation.