Over two years, Nvidia invested in more than 80 AI startups through funding and ecosystem programs. These investments shape AI infrastructure, model tooling and generative AI apps to favor its AI chips and integrated hardware-software stack, while raising the risk of vendor lock-in.

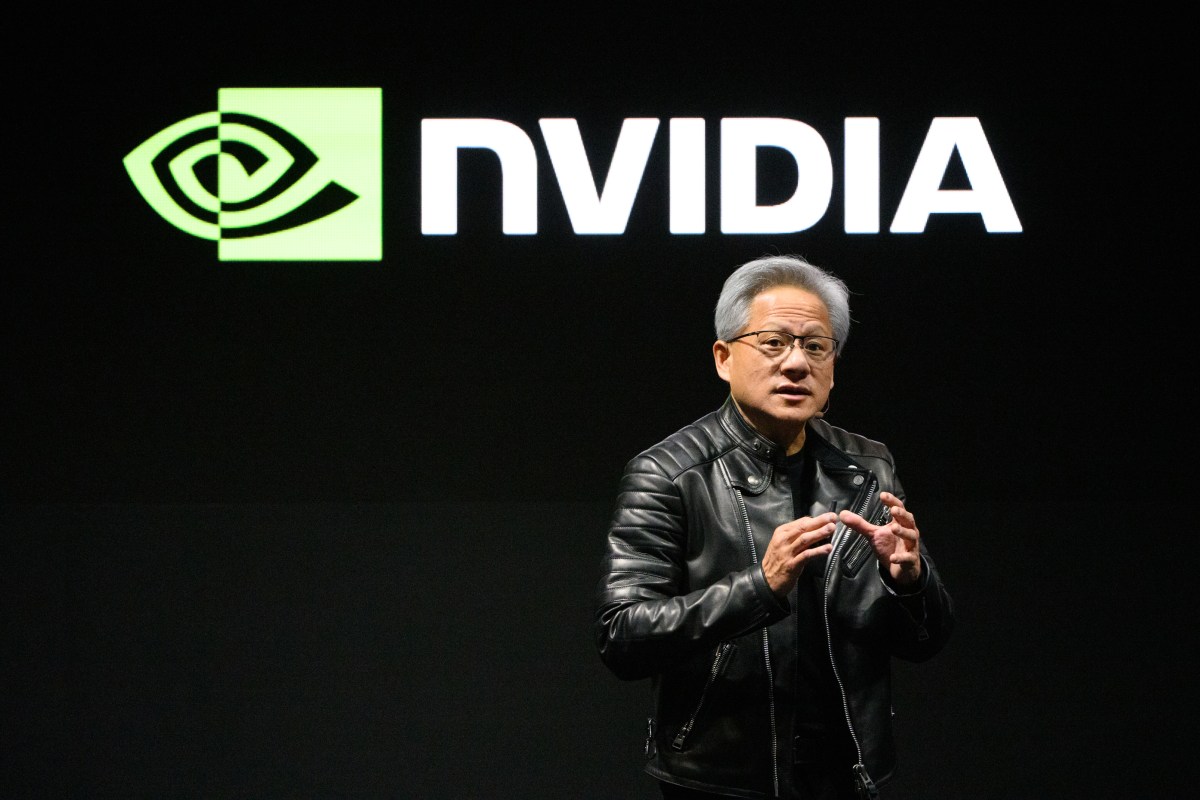

Over the last two years, Nvidia has invested in more than 80 AI startups, combining direct funding and ecosystem programs to accelerate companies that depend on its chips and software. This level of AI infrastructure investment matters because it lets a single silicon supplier influence which tools and platforms gain traction across the AI stack, from model tooling to generative AI applications. Could these strategic partnerships speed enterprise AI adoption while also concentrating market power?

Nvidia built its position selling GPUs optimized for machine learning, and its continued lead rests on an integrated hardware-software stack that ties its AI chips to developer tooling and cloud partners. Chips alone do not guarantee continued demand. The modern AI stack depends on models, orchestration platforms, data pipelines and cloud services that must be tuned to specific hardware. To protect and extend its advantage, the company pursues an ecosystem play: it funds and partners with software and application startups so that its platforms become the default choice.

In plain language, an ecosystem play means the chipmaker is not just selling processors but also nurturing the software and services that make those processors more valuable. That boosts adoption and raises switching costs for customers who standardize on the stack. It also raises questions about vendor lock-in and market concentration.

Reporting shows Nvidia's outreach is broad and strategic rather than scattershot. Highlights include:

These are not merely financial bets. They are strategic AI investments designed to lock customers into Nvidia's architecture and to accelerate downstream innovation that benefits the hardware business.

So what does this concentrated investment strategy mean for stakeholders?

On the positive side, startups that integrate closely with Nvidia gain deep hardware integration, which shortens time to market and improves performance for their customers. Collaboration between chip design and software teams can squeeze more efficiency out of models and enable new enterprise use cases sooner. That matters for enterprise AI strategy and for firms prioritizing AI-enabled transformation.

On the downside, the same alignment that speeds innovation can route most of the benefits back to the dominant vendor. When platforms tailor their libraries to Nvidia silicon, alternatives may struggle to match performance without major investment, amplifying concerns about market concentration and vendor lock-in.

Organizations that depend on AI should map their stack to identify which components are Nvidia-optimized and which are portable. Strategies include multi-vendor deployments, insisting on open standards, and negotiating portability and interoperability clauses into vendor contracts. These steps reduce single-vendor dependency and help organizations navigate an evolving regulatory landscape and investor scrutiny.

As AI scales, infrastructure choices increasingly determine energy consumption. Sustainability and energy efficiency are becoming important criteria when evaluating utility-scale data center projects tied to AI growth. Liquid-cooled data centers and the evolution of compute fabrics are examples of infrastructure trends that affect both cost and environmental impact.

Nvidia's investment push across infrastructure, model tooling and generative AI applications is reshaping how AI products are built and scaled. The strategy can speed technical progress and lower barriers for product teams, but it also concentrates influence in the hands of a single platform provider. Businesses should prepare by auditing their dependencies and insisting on portability where possible. As Nvidia's ecosystem deepens, the industry must balance the benefits of rapid innovation against the risks of excessive concentration. Will that balance favor open competition or a dominant full-stack provider? That is the question to watch next.