King Charles III gave Nvidia CEO Jensen Huang a copy of his 2023 speech, which warned that AI is as transformative as electricity and poses societal risks. The gesture spotlights Nvidia and other AI hardware vendors in debates on compute governance, compliance, transparency, and regulatory scrutiny.

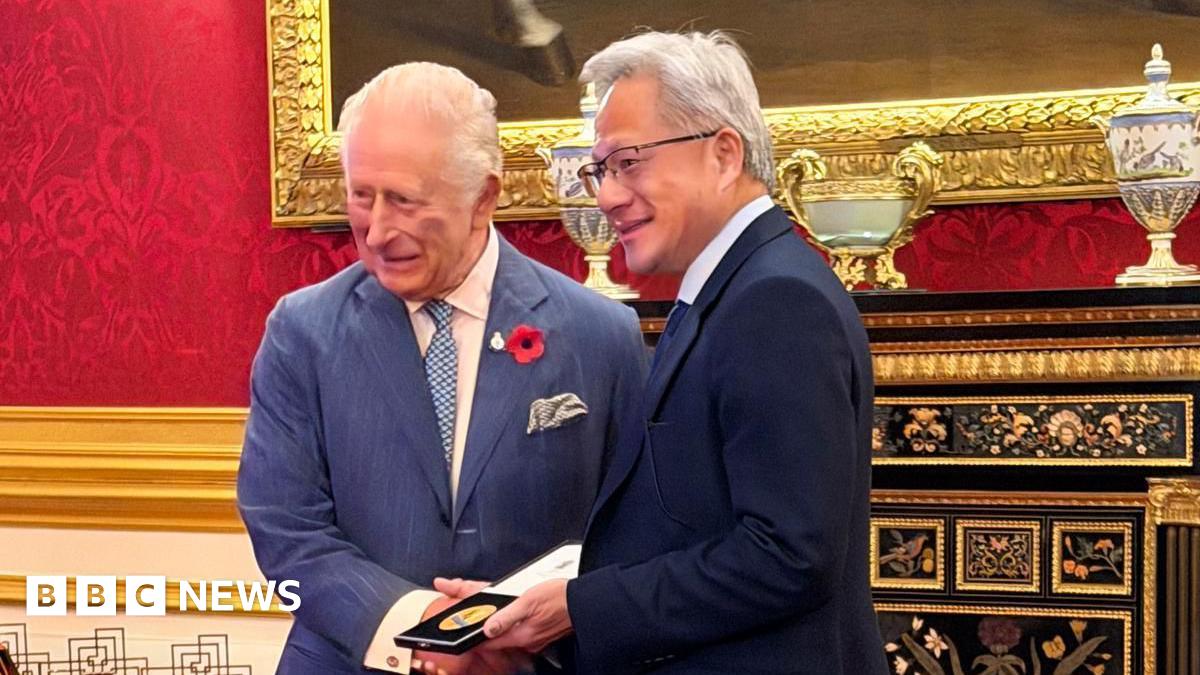

In a symbolic exchange that highlights growing concern about artificial intelligence, King Charles III handed Nvidia CEO Jensen Huang a copy of his 2023 speech, which called AI "no less important than the discovery of electricity" and urged that its risks be tackled. The moment, reported by the BBC on 6 November 2025, puts the spotlight on Nvidia and other AI hardware suppliers as central actors in conversations about AI safety and compute governance.

Describing AI as comparable to electricity emphasizes how foundational compute and infrastructure are to modern systems. Because a small number of firms supply the bulk of high-end processors and datacenter accelerators, calls for responsible AI now reach hardware vendors as well as model builders. That raises practical questions about compliance, risk assessment, and transparency in how advanced compute is provisioned and audited.

The King’s gesture has several concrete implications for businesses and regulators. First, hardware providers are now de facto policy actors. Governments may engage manufacturers on licensing practices, export controls, and responsible sales. Possible measures include customer vetting, usage monitoring, contractual limits on certain applications, and audit trails that show compliance with ethical standards.

Second, concentration of compute supply gives vendors both leverage and responsibility. Firms that control access to advanced accelerators can shape how research and development proceed. Reasoned governance could slow risky development while preserving safer innovation. Yet overly blunt constraints risk stifling legitimate work or shifting demand to other suppliers and jurisdictions.

Third, operational and reputational pressures rise. Public scrutiny from high-profile figures increases the need for clearer public commitments, stronger data governance, and demonstrable algorithmic accountability. Companies should consider establishing compliance teams, independent audits, and red-team processes to assess potential misuse.

One clear insight is that control over foundational resources such as data and compute becomes a central lever in shaping responsible deployment of automation. Vendors that acknowledge their role and invest in transparency, ethical standards, and verifiable compliance will be better positioned as regulatory scrutiny increases.

The King’s act of giving his speech to Nvidia’s CEO is a reminder that AI’s risks are societal as well as technical. As governments, industry, and civil society continue to debate regulation and safety, hardware suppliers will be key interlocutors in shaping the rules that govern advanced compute. The practical challenge ahead is translating high-level warnings into clear, enforceable, and balanced rules that limit harms while allowing responsible innovation in AI.