Google has set an internal mandate to expand its AI compute capacity roughly 1,000x by 2030. The plan centers on new TPUs, datacenter builds, and custom chips, and it will affect cloud service pricing, energy use, and market structure.

Google has quietly set an internal mandate to expand its AI compute capacity roughly 1,000x by 2030, even as its executives publicly warn about an AI market bubble. The juxtaposition matters: it shows Google is doubling down on AI infrastructure and supercomputing as the foundation for future cloud AI acceleration and product features.
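To get a feel for what "1,000x by 2030" means year over year, the sketch below converts the multiplier into an annual growth factor and a doubling time. The article does not say when the clock started, so the 4-, 5-, and 6-year horizons are assumptions, and the smooth compound-growth model is a simplification: real capacity arrives in steps as datacenters and chip generations come online.

```python
import math

TARGET_MULTIPLIER = 1_000  # the reported 1,000x capacity goal


def implied_growth(multiplier: float, years: float) -> tuple[float, float]:
    """Return (annual growth factor, doubling time in months) needed to reach
    `multiplier` over `years`, assuming smooth compound growth."""
    annual = multiplier ** (1 / years)
    doubling_months = 12 * years / math.log2(multiplier)
    return annual, doubling_months


# Assumed horizons; the start date of the mandate is not given in the article.
for years in (4, 5, 6):
    annual, months = implied_growth(TARGET_MULTIPLIER, years)
    print(f"{years} years: ~{annual:.2f}x per year, doubling every ~{months:.1f} months")
```

Because 1,000 is close to 2^10, the target amounts to roughly ten doublings. Over an assumed five-year window, that means capacity doubling about every six months.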

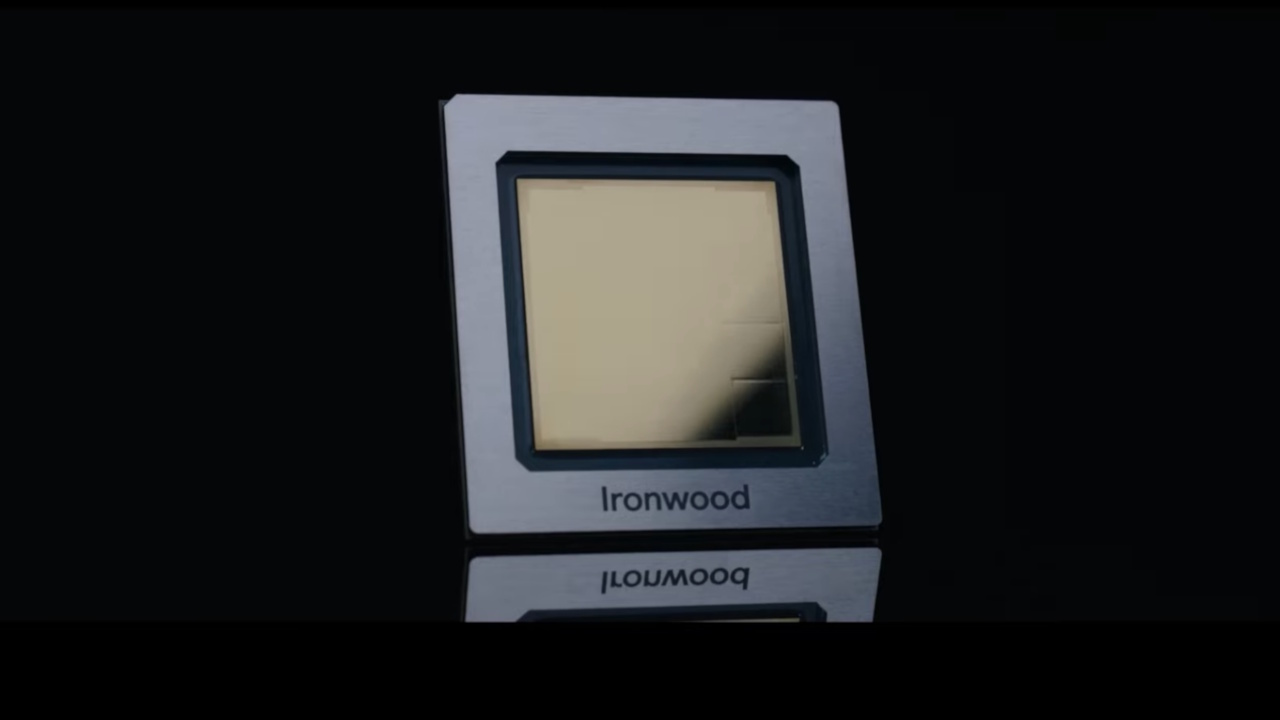

Modern AI models need huge amounts of specialized compute to train and to serve users at scale. AI compute scale describes the aggregate processing power across chips, servers, and datacenters. Google plans to deploy successive TPU generations, such as the seventh-generation Ironwood (TPU v7), expand datacenter capacity, and invest aggressively in custom silicon. More TPU capacity means faster training of large models, lower latency for real-time use cases, and greater availability of advanced AI services for enterprises and developers.

For enterprises and startups, the mandate signals more powerful, integrated AI services from Google Cloud and its product suite. Greater AI computing power can enable new real-time multimodal features and reduce latency for large models. The effects on cloud pricing and competition are mixed. If Google achieves efficiency gains with custom hardware, it could offer more competitive AI compute pricing at scale. If capital and energy costs rise, those expenses could be reflected in pricing, especially for peak workloads.

A 1,000x leap in compute implies a much larger energy footprint unless it is offset by dramatic efficiency improvements or a shift to low-carbon power. This will draw attention from sustainability-focused investors, regulators, and enterprise buyers weighing total cost of ownership and carbon impact. Concentrated investment by hyperscalers also raises questions about market structure, competition, and national security oversight.
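The energy argument reduces to simple ratios: energy scales with compute divided by efficiency (useful work per joule), and emissions scale with energy times the carbon intensity of the power supply. The sketch below assumes a fixed workload mix and utilization; the efficiency multipliers are illustrative inputs, not published Google figures, and `relative_energy` / `relative_emissions` are hypothetical helper names.

```python
def relative_energy(compute_multiplier: float, efficiency_multiplier: float) -> float:
    """Energy use relative to today, assuming energy = work / (work per joule)
    and an unchanged mix of workloads and utilization."""
    if efficiency_multiplier <= 0:
        raise ValueError("efficiency_multiplier must be positive")
    return compute_multiplier / efficiency_multiplier


def relative_emissions(compute_multiplier: float, efficiency_multiplier: float,
                       carbon_intensity_ratio: float) -> float:
    """Emissions relative to today: relative energy scaled by the change in grid
    carbon intensity (e.g. 0.5 means each kWh emits half as much CO2)."""
    return relative_energy(compute_multiplier, efficiency_multiplier) * carbon_intensity_ratio


# Illustrative efficiency gains only; these are not published Google figures.
for efficiency in (1, 10, 30, 100):
    energy = relative_energy(1_000, efficiency)
    print(f"{efficiency:>3}x efficiency -> {energy:,.0f}x energy")
```

Even a hypothetical 100x gain in performance per watt would leave energy use 10x higher than today. That is why the supply side, meaning the carbon intensity of each kWh, matters as much as chip efficiency.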

Developers should expect greater access to high-performance infrastructure for new AI-driven applications. The expansion creates opportunities for advanced model fine-tuning, distributed training, and integrated developer tools built on Google's AI compute. Product teams should track Google Cloud's AI acceleration roadmaps, pricing tiers, and regional availability as they plan production deployments.

Google's plan combines newer TPU generations such as Ironwood (TPU v7), wider datacenter builds, and heavy investment in custom chips and interconnects. The strategy emphasizes custom accelerators tuned for machine learning workloads, which typically deliver better performance per watt than general-purpose processors.

Benefits include faster training, lower inference latency, more reliable availability, and the ability to power multimodal and real-time AI features. Costs include large capital expenditure, higher energy consumption, and potentially more complex pricing for cloud customers if efficiency gains do not fully offset the costs of scale.

At scale, Google could lower unit compute costs through efficiency and volume and offer competitive pricing for cloud AI acceleration. Alternatively, rising capital and energy expenses could be passed on to customers or drive tiered pricing for high-intensity workloads. Smaller providers may struggle to match the capital intensity required for frontier models, which could deepen customer lock-in with major cloud vendors.
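Whether scale lowers unit cost comes down to whether capital and energy spending grow more slowly than the compute actually delivered. The toy model below makes that explicit. Every number in it is made up for illustration, and `FleetYear` is a hypothetical name; it is a sketch of the trade-off, not a model of Google's actual economics.

```python
from dataclasses import dataclass


@dataclass
class FleetYear:
    """One year of a hypothetical accelerator fleet. All figures are illustrative."""
    amortized_capex: float    # capital cost spread over the year (currency units)
    energy_kwh: float         # electricity consumed in the year
    price_per_kwh: float      # average electricity price
    compute_delivered: float  # useful work delivered, in arbitrary compute units

    def unit_cost(self) -> float:
        """Total yearly cost divided by the compute actually delivered."""
        total = self.amortized_capex + self.energy_kwh * self.price_per_kwh
        return total / self.compute_delivered


# Hypothetical baseline.
today = FleetYear(amortized_capex=100.0, energy_kwh=1_000.0,
                  price_per_kwh=0.10, compute_delivered=1_000.0)

# Hypothetical 1,000x scale-up: capex grows 200x, energy 100x (10x better
# efficiency), and electricity becomes 20% more expensive.
scaled = FleetYear(amortized_capex=20_000.0, energy_kwh=100_000.0,
                   price_per_kwh=0.12, compute_delivered=1_000_000.0)

print(f"baseline unit cost: {today.unit_cost():.3f}")
print(f"scaled unit cost:   {scaled.unit_cost():.3f}")
```

With these invented inputs, unit cost falls from 0.200 to 0.032 because both cost lines grow far less than 1,000x. If capex or energy grew nearly as fast as delivered compute, the advantage would disappear and price pressure on customers would follow.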

Companies planning to adopt AI extensively should monitor Google Cloud announcements, evaluate pricing and sustainability commitments, and design architectures that can move across providers. When estimating total cost of ownership, weigh hybrid deployments, cost projections, and vendor lock-in risk.

Google's internal mandate to expand AI compute capacity 1,000x by 2030 signals that compute is central to its future AI products and market position. Expect more powerful AI services from Google, alongside higher stakes for competition, energy, and regulation. For enterprise buyers the takeaway is simple: prepare for more capable cloud AI acceleration, and track costs, availability, and sustainability disclosures closely.