California enacted SB 53 on September 29, 2025, requiring the largest AI labs to publish safety protocols, risk assessments, and testing and governance documentation while adding whistleblower protections. The law raises questions about AI transparency, compliance, and trade secrets, and it could shape national AI regulation.

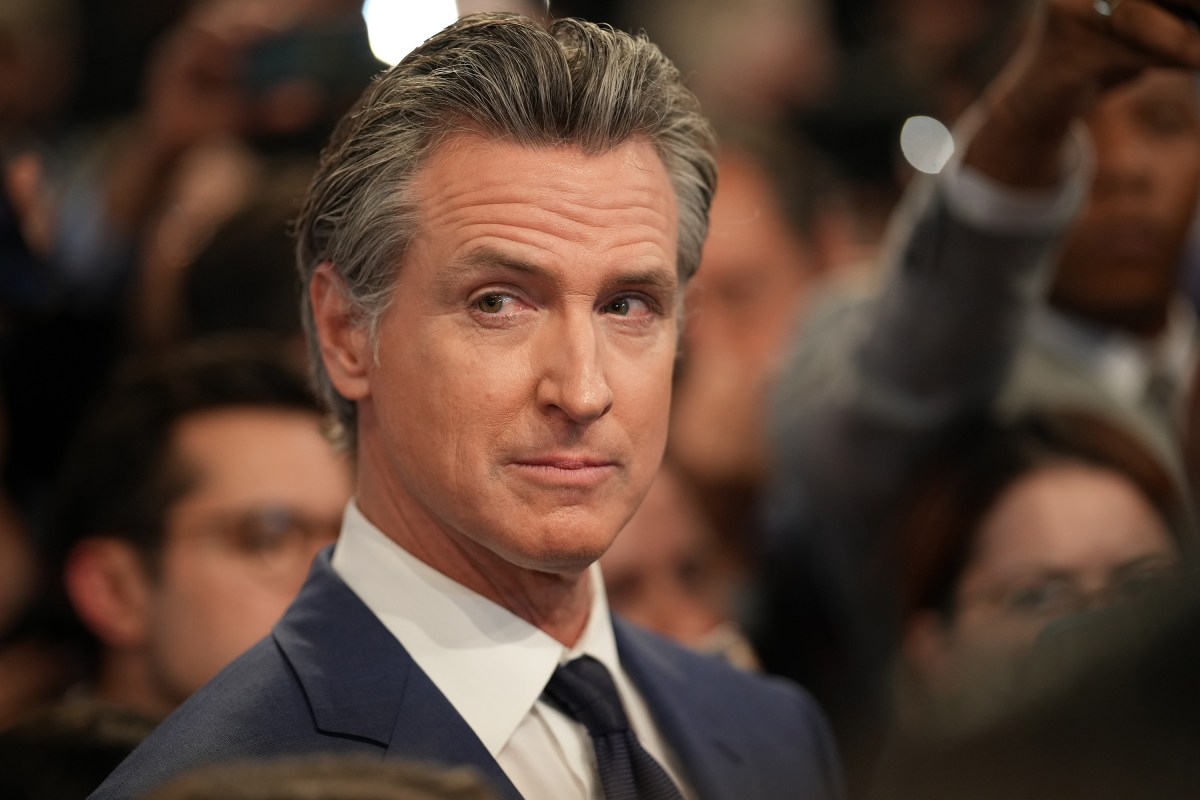

On September 29, 2025, California Governor Gavin Newsom signed SB 53, a first-in-the-nation law that requires the largest AI labs to increase transparency about their safety work and governance. The law applies to major developers such as OpenAI, Anthropic, Meta, and Google DeepMind, and mandates public disclosure of safety protocols, risk assessments, and documentation about how advanced models are tested and governed. SB 53 also strengthens whistleblower protections so employees can raise safety concerns with reduced risk of retaliation.

Policymakers, researchers, and the public have increasingly emphasized AI safety and AI governance as models grow more capable. Frontier AI models are those that demonstrate broad capabilities near or beyond human performance and that require significant data and compute. Concentration of these models in a small number of firms has driven calls for greater AI transparency, accountability, and external oversight.

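SB 53 focuses on boosting AI safety and enabling meaningful review by regulators and independent researchers. Core obligations include publishing safety protocols, risk assessments, and documentation of how advanced models are tested and governed, along with stronger whistleblower protections for employees.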

SB 53 is likely to reshape AI compliance and operational processes at the largest labs.

Organizations that license or integrate models from covered labs should begin updating contracts and due diligence workflows to address AI risk assessment and AI accountability. Procurement, legal, and security teams will need to consider how safety disclosures affect liability, service level expectations, and data protection obligations.

Transparency is not a cure-all. Disclosures are most useful when paired with clear regulatory standards, third-party review capacity, and technical methods for protecting sensitive information. The law advances the broader conversation about how to achieve responsible AI through mechanisms such as AI audits, ethical AI frameworks, and independent oversight.

Who does SB 53 cover? The largest AI labs, as determined by model capability and revenue or size thresholds.

What must companies publish? Safety protocols, risk assessments, and documentation about testing and governance to support external review and AI risk management.

Why does it matter? SB 53 is designed to increase public trust, enable AI oversight, and create stronger whistleblower protections so employees can safely report safety lapses.

SB 53 marks a meaningful shift in AI governance toward greater transparency, AI accountability, and stronger whistleblower protections. Businesses and researchers should prepare for new compliance requirements and engage in shaping reporting standards that protect both safety and innovation.