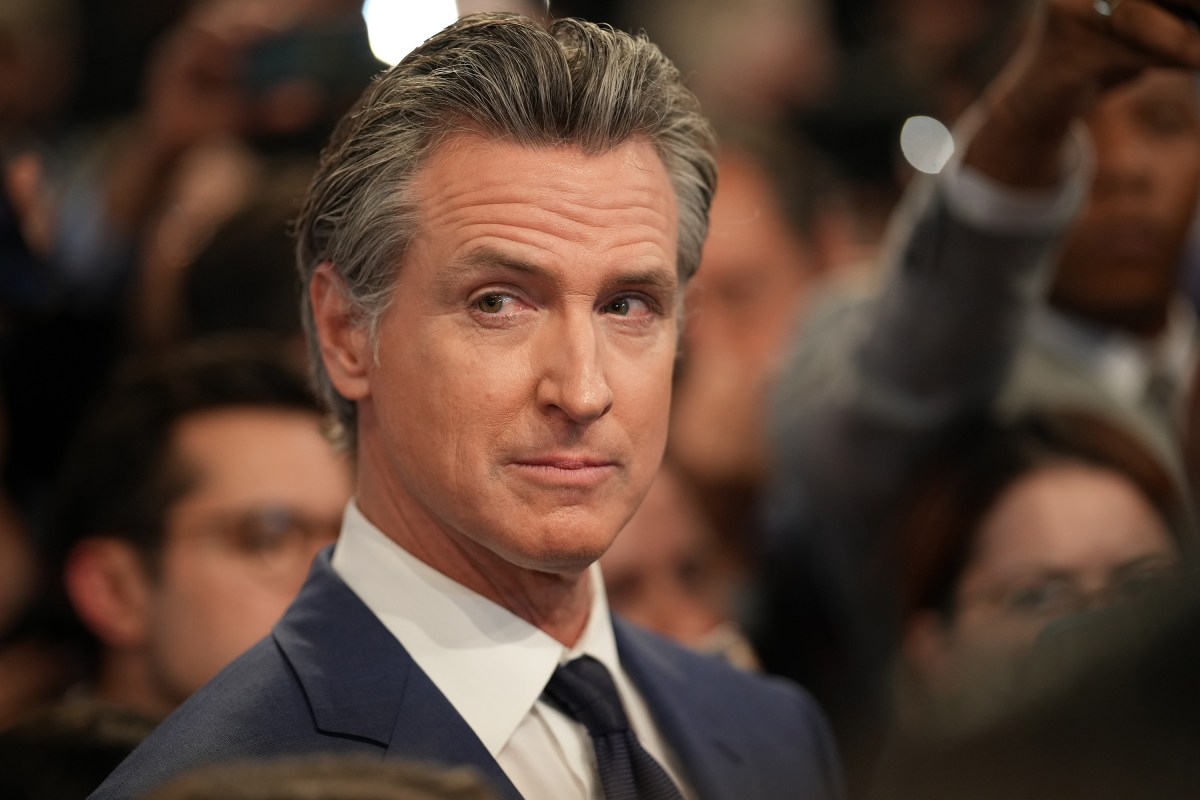

In late September 2025, Governor Gavin Newsom signed SB 53, a watershed AI safety law that puts California at the center of AI regulation in 2025. The law targets large frontier AI labs, including OpenAI, Anthropic, Meta, and Google DeepMind, and requires public- and regulator-facing disclosure of safety protocols, AI risk assessment results, and model testing procedures. SB 53 treats AI transparency as an operational requirement rather than a voluntary principle.

Why this matters

SB 53 signals a shift in California's AI governance toward concrete safety law and enforceable accountability standards. By combining disclosure obligations with whistleblower protections and civil penalties, the statute creates clear compliance requirements for firms building high-capability models. The move also highlights the growing problem of state-law fragmentation and the need for coordinated implementation guidance from regulators and industry.

Key details

- Targeted entities: The bill applies to major frontier AI labs named in reporting and to others that meet statutory thresholds, focusing on the most capable models and their developers.

- Disclosure requirements: Firms must disclose safety protocols, risk assessments, and testing procedures to regulators and in many cases to the public, balancing transparency with protection of trade secrets.

- Whistleblower protections: Employees who raise safety or misuse concerns receive legal safeguards against retaliation, helping surface issues earlier and strengthen internal reporting channels.

- Enforcement tools: California regulators gain authority to investigate compliance and impose civil penalties for violations, giving firms a practical incentive to take AI governance seriously.

- Policy balance: SB 53 aims to improve AI transparency while preserving innovation and protecting IP where justified. How regulators apply CPPA-related rulemaking will shape the operational impact.

Technical terms explained

- Frontier AI: High-capability AI models whose behavior is difficult to predict and whose misuse could cause large-scale harm. These are the most powerful systems in use today.

- Whistleblower protections: Legal measures that prevent employers from retaliating against workers who report safety concerns, increasing the chance that risks are identified before harm occurs.

- AI risk assessment: Structured reviews that identify potential misuse scenarios, failure modes, and mitigation plans for models and deployment contexts.

Implications and analysis

SB 53 creates immediate obligations and future precedent. Key implications include:

- Compliance and operational impact: Affected companies will need documented safety programs, reproducible testing regimes, and formal reporting workflows. Expect increased compliance costs and new roles for model safety and regulatory reporting.

- Workforce dynamics: Stronger legal protections for employees who report problems may lead to earlier discovery of safety gaps and more investment in internal review boards and escalation paths.

- Innovation versus secrecy: The law attempts to balance transparency with protection of trade secrets. Regulatory guidance will determine how firms meet disclosure requirements while safeguarding IP.

- Precedent setting: California's action will likely influence other states and international policy. State-level enforcement with civil penalties could accelerate national and multilateral regulatory responses.

- Public trust: Greater transparency about testing and risk assessment can improve public confidence in AI systems. Conversely, poor compliance risks reputational harm and legal exposure.

Practical steps for organizations

- Audit current model development and testing practices against SB 53's disclosure categories and compliance requirements.

- Establish or strengthen whistleblower and employee reporting channels with legal review to ensure protections are robust.

- Create a public- and regulator-facing disclosure roadmap that balances transparency with legitimate trade secrets.

- Track CPPA rulemaking and California regulator guidance to anticipate enforcement expectations and timelines.

- Invest in reproducible AI risk assessment processes and documentation to support audits and inquiries.
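The audit step above can be sketched as a simple gap check. This is a minimal illustration, not a compliance tool: the three category names mirror the disclosure areas described in this article rather than the statute's wording, and `ModelRecord`, `find_gaps`, and `audit` are hypothetical names introduced for the example.

```python
from dataclasses import dataclass, field

# Disclosure categories as described in this article (an assumption,
# not the statutory text).
DISCLOSURE_CATEGORIES = ("safety_protocols", "risk_assessments", "testing_procedures")


@dataclass
class ModelRecord:
    """Hypothetical internal record of the documentation held for one model."""
    name: str
    # Maps a disclosure category to the documents that cover it.
    documents: dict[str, list[str]] = field(default_factory=dict)


def find_gaps(record: ModelRecord) -> list[str]:
    """Return the categories for which the model has no documentation."""
    return [c for c in DISCLOSURE_CATEGORIES if not record.documents.get(c)]


def audit(records: list[ModelRecord]) -> dict[str, list[str]]:
    """Map each model name to its missing categories, omitting complete models."""
    report = {}
    for record in records:
        gaps = find_gaps(record)
        if gaps:
            report[record.name] = gaps
    return report


if __name__ == "__main__":
    records = [
        ModelRecord("model-a", {"safety_protocols": ["policy-v2.pdf"],
                                "risk_assessments": ["ra-2025.md"],
                                "testing_procedures": ["evals.md"]}),
        ModelRecord("model-b", {"safety_protocols": ["policy-v2.pdf"],
                                "testing_procedures": []}),
    ]
    for name, gaps in audit(records).items():
        print(f"{name}: missing {', '.join(gaps)}")
```

A category with an empty document list counts as a gap, so a placeholder entry cannot mask missing evidence. A real audit would map categories to the statute's actual requirements and to legal review, which this sketch does not attempt.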

Conclusion

SB 53 marks a new era of AI safety law focused on transparency, worker safeguards, and enforceability. For companies building powerful models, the message is clear: technical safeguards and clear documentation are now core elements of compliance. Organizations should treat the steps above as a starting compliance checklist and begin operationalizing safety practices now, since how SB 53 is implemented will shape AI governance well beyond California.