California has enacted SB 53, the first US law to require frontier AI labs to disclose safety protocols, report critical incidents to the Office of Emergency Services, and protect whistleblowers. The measure raises AI safety standards and sets clear compliance requirements for major developers.

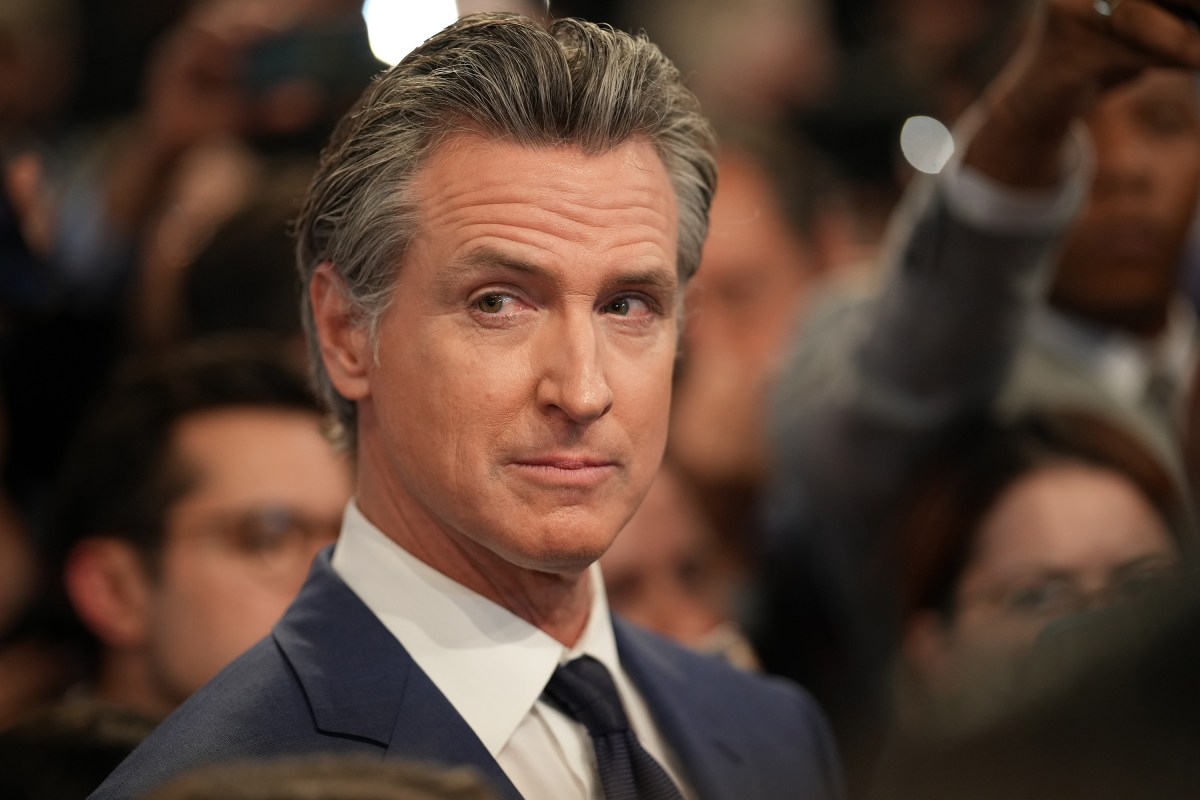

California has moved from voluntary guidance to binding law. On September 29, 2025, Governor Gavin Newsom signed SB 53, a landmark AI law that establishes new safety standards and compliance requirements. The bill requires transparent governance from frontier AI labs such as OpenAI, Anthropic, Meta, and Google DeepMind. Covered developers must disclose safety protocols, create AI audit trails, report critical incidents to the California Office of Emergency Services, and extend whistleblower protections to employees who raise safety concerns.

Policymakers and advocacy groups argued that voluntary disclosures were uneven and that highly capable systems create systemic risks at scale. SB 53 responds by setting a statutory baseline for transparency and incident reporting. The law targets the most powerful systems, often called frontier AI, and seeks to make risk management practices auditable and verifiable for regulators and researchers.

SB 53 creates immediate compliance work for large developers. Firms will need to expand safety governance, maintain AI audit trails, and develop reporting workflows. While this raises operational costs, it also gives companies clearer legal expectations against which to align their safety and governance practices.
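For engineering teams wondering what an "auditable" trail could look like in practice, one common pattern is a hash-chained, append-only log, in which each entry commits to the one before it so that later edits are detectable. The sketch below is an illustration only: SB 53 is not described here as prescribing any log format, and the `AuditLog` class and the event fields (`type`, `model`, `severity`) are hypothetical names chosen for the example.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with a canonical form of the event."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only log where each entry commits to the previous entry's hash."""

    entries: list = field(default_factory=list)

    def append(self, event: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "event": event,
            "prev_hash": prev_hash,
            "hash": _digest(prev_hash, event),
        }
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash in order; any edited or reordered entry fails."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    # Hypothetical event shapes, not fields defined by the statute.
    log.append({"type": "safety_review", "model": "example-model"})
    log.append({"type": "incident", "severity": "critical"})
    print("log intact:", log.verify())
```

A chain like this only shows that recorded entries were not altered after the fact; it says nothing about whether the right events were logged in the first place, which remains a governance question.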

Whistleblower protections may change internal dynamics, making employees more likely to report concerns and surfacing risks earlier. Companies will need to balance transparency with protecting proprietary methods. Effective implementation will require clear rules about what to disclose publicly while sharing sensitive technical details confidentially with regulators.

California often influences national policy. SB 53 could shape future AI regulation in other states and at the federal level, and may push industry standards bodies to update their guidance on responsible AI. Organizations that rely on third-party AI services should reassess vendor risk and expect more formal safety attestations from major providers.

Observers should track how firms operationalize disclosures, how the Office of Emergency Services handles technical incident reports, and whether other jurisdictions adopt similar mandates. The signing was reported by TechCrunch. The law signals a new era in which safety must be demonstrable and auditable rather than aspirational.

Bottom line: SB 53 raises the bar for AI safety and transparency, creating clear compliance requirements for frontier developers and encouraging a shift toward safety practices that can be verified.